Blogmarks

The risk of AI side quests

https://bsky.app/profile/carnage4life.bsky.social/post/3mfytrcpux22q

I've been in meetings where I've been asked to imagine a near future in which members of the team who aren't currently producing code (e.g. project managers, C-suite, support team) can prompt an LLM black box with feature requests. Each of those requests would be processed in the background and eventually produce a preview environment for the prompter to look at and a PR to hand off to a software developer.

One of the assumptions baked into this workflow is that there are all these well-defined, high-priority issues just sitting in the project management software waiting to be worked. Maybe that is the case in some orgs; however, in my experience, most teams and projects I've worked on don't have this. The backlog is a place where "nice-to-have" improvements and half-baked ideas collect dust and drift out of sync with the current state of the software system.

there’s a risk of AI side quests distracting from doubling down on the main one.

My hard-earned intuition for what works well with various LLMs and coding agents, combined with my general expertise in software engineering and my knowledge of the specific codebase(s), is what allows me to get strong results from LLM tooling.

I suspect in a lot of cases I'd be tossing out the initial "engineer-out-of-the-loop" attempt and re-prompting from scratch, all while trying to keep tabs on the main quest work I have in progress.

Andrej Karpathy wrote a post recently that gets at why I think "asking an LLM to build that feature from the backlog" is not as straightforward as it seems:

It’s not perfect, it needs high-level direction, judgement, taste, oversight, iteration and hints and ideas. It works a lot better in some scenarios than others (e.g. especially for tasks that are well-specified and where you can verify/test functionality). The key is to build intuition to decompose the task just right to hand off the parts that work and help out around the edges.

I'd like to coin the term "AI-assisted Backlog Resurrection", but I don't think it's going to catch on.

Your codebase is NOT ready for AI

https://www.youtube.com/watch?v=uC44zFz7JSM

Two things:

- Coding agents operating on your codebase are much more powerful than a disconnected LLM chat session where you try to manually give whatever context you think is important.

- Many of our decades-old (and newer) software engineering practices directly benefit AI-assisted coding.

Your codebase, way more than the prompt that you use, way more than your AGENTS.md file, is the biggest influence on AI's output.

Use DDD's Bounded Context or A Philosophy of Software Design's Deep Modules to create clear boxes around functionality with interfaces on top.

Design your codebase for progressive disclosure of complexity.

real-world-rails: 200+ production open source Rails apps & engines in one repo

https://github.com/steveclarke/real-world-rails

This is an awesome resource. I've typically had a handful of open-source Rails apps in the back of my mind that I sometimes remember to go check out, but I've never seen this many in one place. What a great place to learn from, observe patterns, and borrow ideas.

I heard about this from this tweet:

I have 200+ production Rails codebases on my local disk. Discourse, GitLab, Mastodon, and a ton of others — all as git submodules in one repo. I've been referencing it for years.

For most of that time it meant a lot of manual grepping and reading file after file. Valuable but tedious. You had to be really motivated to sit there and read through that much source code.

This past year, with agentic coding, everything changed. Now I just ask questions and the agent searches all 200+ apps for me. "What are the different approaches to PDF generation?" "Compare background job patterns across these codebases." What used to take hours of reading takes a single prompt.

The original repo hadn't been updated in two years and I was using it enough that I figured I should fork it and bring it forward. So I did:

- Updated all 200+ submodules to latest

- Added Gumroad, @dhh's Upright, Fizzy, and Campfire

- Stripped out old Ruby tooling (agents do this better now)

- Added an installable agent skill

- Weekly automated updates

If you're building with Rails, clone this and point your agent at it. If you know of apps that should be in here, open an issue or PR.

< github link >

PS: Hat tip to Eliot Sykes for the original repo.

Writing code is cheap now

https://simonwillison.net/guides/agentic-engineering-patterns/code-is-cheap/

The math has shifted, in some cases, significantly.

Coding agents dramatically drop the cost of typing code into the computer, which disrupts so many of our existing personal and organizational intuitions about which trade-offs make sense.

That prototype, that bug fix, that nice-to-have -- those things that tend to get pushed off over and over because more urgent things are taking your time -- are suddenly viable in a lot of cases with an LLM coding agent. Equipped with a paragraph of detail and using something like Cursor or Claude Code, we can hand off these back-burner tasks, iterate on the idea in minutes instead of hours, and have at the very least an MVP if not a ready-to-ship implementation. All with a relatively minimal interruption to the main task at hand.

While we're trying to catch up to what is possible here, the processes our organizations and engineering teams have for taking a feature from idea to shipped and supported implementation have some catching up to do as well. In some cases, these processes are meant to slow things down as a way of managing risk. Those processes are at odds with the mandate to use AI agents to ship faster. What are organizations going to do with this contradiction? That's an open question.

I also like that as part of this series Simon is putting forth the term Agentic Engineering as the other side of the spectrum from vibe coding when it comes to using LLMs to write code.

Ladybird adopts Rust, with help from AI

https://ladybird.org/posts/adopting-rust/

I like hearing about other people's AI coding workflows, especially when they demonstrate concrete success on a complex task. Porting a substantial part of a browser engine from C++ to Rust in two weeks with no regressions is impressive. This is a great example of a human heavily in the loop, using knowledge of the system to pick which parts of the codebase to port and when.

I used Claude Code and Codex for the translation. This was human-directed, not autonomous code generation. I decided what to port, in what order, and what the Rust code should look like. It was hundreds of small prompts, steering the agents where things needed to go. After the initial translation, I ran multiple passes of adversarial review, asking different models to analyze the code for mistakes and bad patterns.

Google CodeJam problem archive

https://github.com/google/coding-competitions-archive

Back in college, I participated in Google CodeJam a couple of years in a row. It felt like a fun, next-level challenge after the ACM coding competitions I took part in with a team through my university. I never made it very far in CodeJam because I wasn't on the level of a lot of the leet coders who rolled up to it, but it was always exciting to give it a go. Solving puzzles with code will always be fun.

The linked repo is an archive of the problems across all the years and phases of the Google CodeJam competition.

The Claude C Compiler: What It Reveals About the Future of Software

https://www.modular.com/blog/the-claude-c-compiler-what-it-reveals-about-the-future-of-software

CCC shows that AI systems can internalize the textbook knowledge of a field and apply it coherently at scale. AI can now reliably operate within established engineering practice. This is a genuine milestone that removes much of the drudgery of repetition and allows engineers to start closer to the state of the art.

Right now, LLM coding agents do their best work in codebases where a solid foundation, clear conventions, and robust abstractions and patterns are already well established.

As writing code is becoming easier, designing software becomes more important than ever. As custom software becomes cheaper to create, the real challenge becomes choosing the right problems and managing the resulting complexity. I also see big open questions about who is going to maintain all this software.

Managing the complexity of large software systems has always been what makes software engineering challenging. While LLMs handle the “drudgery” of actually writing the code, are they simultaneously exacerbating the challenge of managing the complexity? If so, how do we, as “conductors” of coding agents, rein in that expanding complexity?

microgpt

http://karpathy.github.io/2026/02/12/microgpt/

Andrej Karpathy’s latest blog post (he doesn’t post very often, so you wanna check it out when he does) presents an implementation of a GPT in 200 lines of Python with no dependencies.

This file contains the full algorithmic content of what is needed: dataset of documents, tokenizer, autograd engine, a GPT-2-like neural network architecture, the Adam optimizer, training loop, and inference loop. Everything else is just efficiency.

turbocommit: Automatically commit after every turn with Claude Code

https://github.com/searlsco/turbocommit

From Justin Searls:

It's been a weird experience enabling turbocommit on my repos and watching it do a better job titling commits than I ever do. That it preserves my agent transcripts in the commit message alongside code changes is really nice!

In particular, a tool like this feels like a win for engineering organizations that cannot convince all their developers to write meaningful and useful commit messages. Instead of "fixed bug", you get the context that was embedded in the prompt: whatever prompt and follow-up conversation it took to give the LLM sufficient context is captured as a version control artifact.

Even though I tend to put effort into my commit messages, summarizing what it took to get to a fix is a fairly lossy process. Automatically capturing the full context I gave the LLM in the commit message preserves detail that would otherwise be lost.

This also plays into this idea of context synergy that I've been thinking a lot about.

Context Synergy

The idea of context synergy is that I want to produce high-context notes that can be leveraged in multiple ways. For instance, if I'm typing notes into Logseq detailing a TODO for my current feature, I've been working to shift my phrasing toward directly actionable notes instead of low-fidelity placeholders. I want that TODO from my notes to be something I can paste directly into an LLM prompt. When I write my notes this way, I find myself writing in an active voice. Those items can then make their way into PR descriptions and project management issue descriptions.

Notes written in this way are useful to me, to an LLM, and to team members and stakeholders who are reading my issues and PRs.

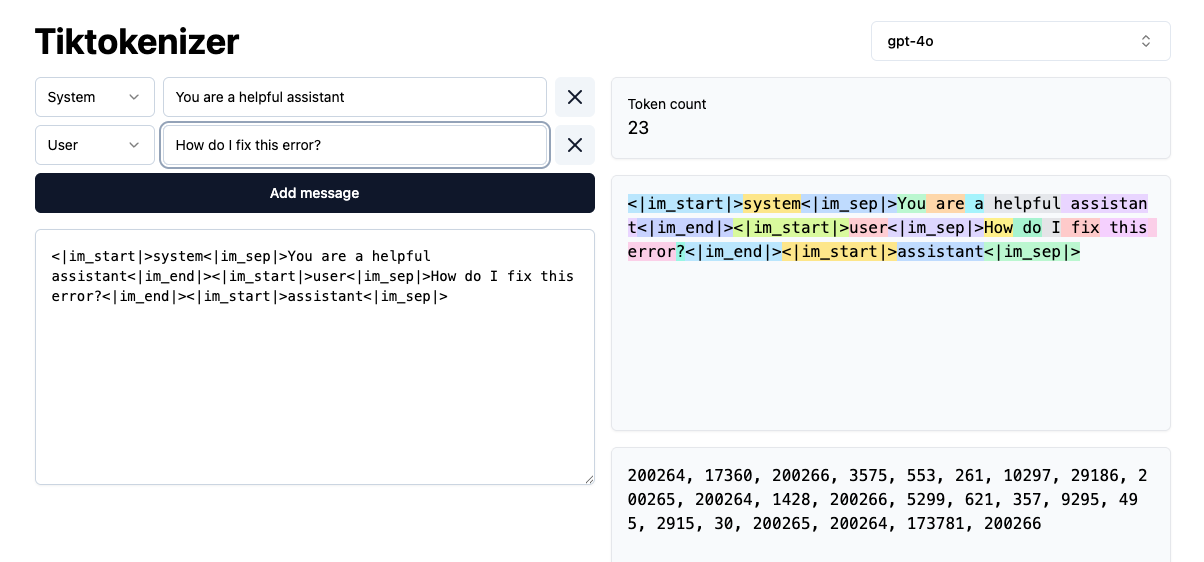

Tiktokenizer: visualize LLM prompt tokenization

https://tiktokenizer.vercel.app/

Using OpenAI's BPE tokenizer (tiktoken), this app shows a visualization of how different models will tokenize prompts (system and user input). If you've wondered what it means that a model has a 100k token context window, these are the tokens that are being counted.

Tokenizations vary from model to model because each model uses its own vocabulary, sized to suit its training corpus and overall inference capabilities. A smaller vocabulary means shorter tokens, so the same text takes more tokens, which eats into the context window and can limit how "smart" the model can be. Larger vocabularies can be an unlock for inference, but they come with a higher training cost and a bigger embedding and output layer, which can make each generation step more computationally expensive.
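To make the vocabulary-size trade-off concrete, here is a toy byte-pair encoding sketch in Python. It is an assumption-laden simplification, not tiktoken: it works on characters rather than bytes and learns its merges from the very text it tokenizes. Each merge adds one entry to the vocabulary, and the point is that more merges (a bigger vocabulary) turn the same text into fewer, longer tokens.

```python
from collections import Counter
from typing import Optional


def most_frequent_pair(tokens: list[str]) -> Optional[tuple[str, str]]:
    """Return the most common adjacent pair of tokens, or None if there are none."""
    pairs = Counter(zip(tokens, tokens[1:]))
    if not pairs:
        return None
    return pairs.most_common(1)[0][0]


def merge_pair(tokens: list[str], pair: tuple[str, str]) -> list[str]:
    """Replace every non-overlapping occurrence of `pair` with one merged token."""
    merged: list[str] = []
    i = 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged


def bpe_tokenize(text: str, num_merges: int) -> list[str]:
    """Toy BPE: start from single characters and greedily apply `num_merges` merges."""
    tokens = list(text)
    for _ in range(num_merges):
        pair = most_frequent_pair(tokens)
        if pair is None:
            break
        tokens = merge_pair(tokens, pair)
    return tokens


text = "low lower lowest low low"
for merges in (0, 3, 6):
    tokens = bpe_tokenize(text, merges)
    print(merges, len(tokens), tokens)
```

Real tokenizers learn their merges once on a large training corpus and then apply that fixed list to new input, which is why the same prompt tokenizes differently across models.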

Agentic anxiety

https://jerodsanto.net/2026/02/agentic-anxiety/

Something’s different this time, and I can say confidently this is the most unsure I’ve ever been about software’s future.

As Jerod puts it, it's not FOMO (fear of missing out) so much as FOBLB (fear of being left behind).

One fascinating part of our conversation with Steve Ruiz from tldraw started when he confessed that he feels bad going to bed without his Claudes working on something.

I think part of what is behind this is the same thing discussed in the HBR article, AI Doesn't Reduce Work--It Intensifies It, which points to this feeling that if we can do more we should be doing more.

AI Doesn’t Reduce Work—It Intensifies It

https://hbr.org/2026/02/ai-doesnt-reduce-work-it-intensifies-it

This 8-month study of 200 employees found that they used AI tools to work at a faster pace and take on a broader scope of work.

While this may sound like a dream come true for leaders, the changes brought about by enthusiastic AI adoption can be unsustainable, causing problems down the line. Once the excitement of experimenting fades, workers can find that their workload has quietly grown and feel stretched from juggling everything that’s suddenly on their plate. That workload creep can in turn lead to cognitive fatigue, burnout, and weakened decision-making.

Crucially,

The productivity surge enjoyed at the beginning can give way to lower quality work, turnover, and other problems.

This all rings pretty true to my own experience. I am definitely finding that I'm able to get more done with these tools, but that is, in part, because I've convinced myself that I can take on extra work because of the upfront time savings.

It is an ongoing challenge to explore how to balance all of it.

The findings from this study seem consistent with what happened with factory automation in the 20th century. Instead of reducing the workload, automation intensified the work, made it more repetitive, and increased the chance of injury. The physical stakes of AI's introduction to knowledge work are much different, but the parallels are there.

How AI assistance impacts the formation of coding skills

https://www.anthropic.com/research/AI-assistance-coding-skills

Anthropic released the results of a recent study warning about the impact that using LLM agents for software development tasks can have on learning and skill development. This affects everyone, but it matters most for earlier-career developers, who have had less chance to build these skills without LLMs in the picture.

None of this means we shouldn't be using LLMs for software development tasks, but rather we have to be intentional about how we use them.

Importantly, using AI assistance didn’t guarantee a lower score. How someone used AI influenced how much information they retained. The participants who showed stronger mastery used AI assistance not just to produce code but to build comprehension while doing so—whether by asking follow-up questions, requesting explanations, or posing conceptual questions while coding independently.

And here is another excerpt from the end of the article:

Our study can be viewed as a small piece of evidence toward the value of intentional skill development with AI tools. Cognitive effort—and even getting painfully stuck—is likely important for fostering mastery. This is also a lesson that applies to how individuals choose to work with AI, and which tools they use.

Some good English word datasets

https://ntietz.com/blog/good-english-word-datasets/

I remember back in college I was building a word game and needed as exhaustive an English word list as I could find. I seem to remember tracking down the system word list on the MacBook Pro I had at the time. I tried finding better resources than that, but didn't come up with much.

Nicole mentions several new-to-me sources in this post that I'll make sure to check out the next time I need that sort of thing.

Falling Into The Pit of Success

https://blog.codinghorror.com/falling-into-the-pit-of-success/

I think this concept extends even farther, to applications of all kinds: big, small, web, GUIs, console applications, you name it. I’ve often said that a well-designed system makes it easy to do the right things and annoying (but not impossible) to do the wrong things. If we design our applications properly, our users should be inexorably drawn into the pit of success. Some may take longer than others, but they should all get there eventually.

I believe this also applies to our codebases. How can we design our internal systems, APIs, class interfaces, domain boundaries, abstractions, design systems, etc. to make it easier for ourselves and others on the team to do the right thing and avoid doing the wrong thing?

Poor Deming never stood a chance

https://surfingcomplexity.blog/2026/02/16/poor-deming-never-stood-a-chance/

A brief history of two approaches for managing change in organizations and why the OKR camp broadly won out. tl;dr: it is easier to observe and measure key results for objectives even when managers have limited bandwidth.

I particularly like the observations right at the end:

In reliability, we talk about “making the right thing easy and the wrong thing hard”, other people call this The Pit of Success. The rationale is that people will tend to do the easy thing over the hard thing. And managers are people too. But sometimes the right thing to do is the harder one, and nothing can be done about that.

Which includes a link to Falling into the Pit of Success.

The Illustrated Word2vec

https://jalammar.github.io/illustrated-word2vec/

Two central ideas behind word2vec are skipgram and negative sampling -- SGNS (Skipgram with Negative Sampling).

We start with random vectors for the embeddings and then we cycle through a bunch of training steps where we compute error values for the results of each step to use as feedback to update the model parameters for all involved embeddings. This process nudges the vectors of words toward or away from others based on their similarity via the error values.
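The training loop described above can be sketched as a single SGNS update in plain Python. Everything here is illustrative: the 4-word vocabulary, 8 dimensions, learning rate, and fixed negatives are made up, whereas real word2vec samples negatives from the vocabulary and processes huge batches. As in word2vec, the target word's vector comes from the embedding matrix and the context and negative vectors from a separate context matrix.

```python
import math
import random

Vector = list[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def sgns_step(target: Vector, context: Vector, negatives: list[Vector], lr: float = 0.1) -> None:
    """One skip-gram-with-negative-sampling update, applied in place.

    The real (target, context) pair has label 1; each negative sample has label 0.
    error = label - sigmoid(target . other). A positive error pulls `other` toward
    the target, a negative error pushes it away; the target gets the summed update.
    """
    grad_target = [0.0] * len(target)
    for other, label in [(context, 1.0)] + [(neg, 0.0) for neg in negatives]:
        error = label - sigmoid(dot(target, other))
        for i in range(len(target)):
            grad_target[i] += error * other[i]  # uses `other` before it is updated
            other[i] += lr * error * target[i]  # target itself is updated at the end
    for i in range(len(target)):
        target[i] += lr * grad_target[i]


random.seed(0)


def random_vector(dim: int = 8) -> Vector:
    """Embeddings start out as small random vectors."""
    return [random.uniform(-0.5, 0.5) for _ in range(dim)]


# Hypothetical words: "fruit" is a real context of "apple"; "car" and "planet" are negatives.
apple, fruit, car, planet = (random_vector() for _ in range(4))
before = sigmoid(dot(apple, fruit))
for _ in range(50):
    sgns_step(apple, fruit, negatives=[car, planet])
after = sigmoid(dot(apple, fruit))
print(f"score(apple, fruit): {before:.2f} -> {after:.2f}")
```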

Random notes from the post:

"Continuous Bag of Words" architecture can train against text by using a sliding window that both looks some number of words back and some number of words forward in order to predict the current word. It is described in this Word2Vec paper.

Skipgram architecture is a method of training where instead of using the surrounding words as context, you look at the current word and try to guess the words around it.
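A minimal sketch of how skipgram turns raw text into (center word, context word) training pairs with a sliding window. The window size and the sentence are illustrative. CBOW would use the same window the other way around: the surrounding words become the input and the center word becomes the label.

```python
def skipgram_pairs(words: list[str], window: int = 2) -> list[tuple[str, str]]:
    """Pair each center word with every word up to `window` positions before or after it."""
    pairs = []
    for i, center in enumerate(words):
        start = max(0, i - window)
        end = min(len(words), i + window + 1)
        for j in range(start, end):
            if j != i:  # a word is not its own context
                pairs.append((center, words[j]))
    return pairs


sentence = "thou shalt not make a machine".split()
for center, context in skipgram_pairs(sentence, window=1)[:4]:
    print(center, "->", context)
```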

In training, you go step by step, looking at the most likely word predicted by your model and then producing an error vector based on which words it should have ranked higher in its prediction. That error vector is then used to update the model and improve its subsequent predictions.

Cosine similarity is a way to measure how similar two vectors are: it is the cosine of the angle between them, so it compares direction and ignores length. For 2D vectors, this is easy to picture as the angle between two arrows drawn from the origin. However, vectors can have many dimensions. "The good thing is, though, that cosine_similarity still works. It works with any number of dimensions."
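A minimal Python sketch of cosine similarity. The vectors are made up; the point is that the same formula works whether the vectors have 2 dimensions or 768. It assumes neither vector is all zeros, since the angle is undefined there.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """cos(theta) = (a . b) / (|a| * |b|), for vectors of any dimension."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


print(cosine_similarity([1.0, 0.0], [2.0, 0.0]))  # 1.0: same direction, different length
print(cosine_similarity([1.0, 0.0], [0.0, 3.0]))  # 0.0: perpendicular
print(cosine_similarity([0.2, -0.4, 0.7, 0.1, 0.5], [0.3, -0.2, 0.6, 0.0, 0.4]))
```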

Build a Large Language Model (From Scratch) - Sebastian Raschka

https://www.manning.com/books/build-a-large-language-model-from-scratch

A lot of examples used when building up the concepts behind LLMs use vectors somewhere in the range of 2 to 10 dimensions. The dimensionality of real-world models is much higher:

The smallest GPT-2 models (117M and 125M parameters) use an embedding size of 768 dimensions... The largest GPT-3 model (175B parameters) uses an embedding size of 12,288 dimensions.

The Self-Attention Mechanism:

A key component of transformers and LLMs is the self-attention mechanism, which allows the model to weigh the importance of different words or tokens in a sequence relative to each other. This mechanism enables the model to capture long-range dependencies and contextual relationships within the input data, enhancing its ability to generate coherent and contextually relevant output.
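A minimal sketch of single-head scaled dot-product self-attention (the formulation from Attention Is All You Need), in plain Python with made-up toy inputs and weights. The book builds this up in PyTorch; a real GPT-style model also learns these weights and adds causal masking and multiple heads, none of which this sketch includes. Each row of `weights` says how much one token attends to every token in the sequence.

```python
import math

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """(n x k) @ (k x m) -> (n x m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: list[float]) -> list[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention without a causal mask.

    x has one row per token (seq_len x d_in); each w_* is d_in x d_out.
    Returns (context vectors, attention weights).
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    # scores[i][j]: how well token i's query matches token j's key
    scores = [
        [sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in k]
        for q_row in q
    ]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    return matmul(weights, v), weights


# Hypothetical toy inputs: 3 tokens with 3-dim embeddings, projected to 2 dims.
x = [[1.0, 0.0, 1.0], [0.0, 2.0, 0.0], [1.0, 1.0, 0.0]]
w_q = [[0.2, 0.8], [0.5, 0.1], [0.9, 0.3]]
w_k = [[0.7, 0.4], [0.1, 0.6], [0.3, 0.2]]
w_v = [[0.6, 0.1], [0.2, 0.9], [0.4, 0.5]]

context, weights = self_attention(x, w_q, w_k, w_v)
for row in weights:
    print([round(w, 3) for w in row])
```

Because every token's scores are computed from the same matrices at once, nothing here depends on processing tokens in order, which is what makes attention parallelizable.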

Variants of the transformer architecture:

- BERT (Bidirectional Encoder Representations from Transformers) - "designed to pre-train deep bidirectional representations from unlabeled text by jointly conditioning on both left and right context in all layers."

- GPT (Generative Pretrained Transformers) - "primarily designed and trained to perform text completion tasks."

BERT receives input where some words are missing and then attempts to predict the most likely word to fill each of those blanks. The original text can then be used to provide feedback to the model's predictions during training.

GPT models are pretrained using self-supervised learning on next-word prediction tasks.
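A simplified sketch of how the two pretraining objectives turn the same unlabeled text into training examples, which is why no human labeling is needed. Assumptions: whitespace-split words stand in for real tokens, and masked positions are always replaced with `[MASK]`; BERT's actual recipe also replaces some selected tokens with a random token or leaves them unchanged, which this skips.

```python
import random
from typing import Optional


def next_token_examples(tokens: list[str]) -> list[tuple[list[str], str]]:
    """GPT-style: each prefix is an input and the token that follows it is the label."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def masked_lm_example(
    tokens: list[str], mask_prob: float = 0.15, rng: Optional[random.Random] = None
) -> tuple[list[str], dict[int, str]]:
    """BERT-style (simplified): hide random tokens; labels are the originals at hidden positions."""
    if rng is None:
        rng = random.Random()
    inputs = list(tokens)
    labels: dict[int, str] = {}
    for i, token in enumerate(tokens):
        if rng.random() < mask_prob:
            inputs[i] = "[MASK]"
            labels[i] = token
    return inputs, labels


tokens = "the model learns from unlabeled text".split()
for context, label in next_token_examples(tokens)[:3]:
    print(context, "->", label)
print(masked_lm_example(tokens, mask_prob=0.3, rng=random.Random(42)))
```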

Foundation models are called that because they are generalized by their pretraining and can then be fine-tuned for specific tasks.

The Unreasonable Effectiveness of Recurrent Neural Networks

https://karpathy.github.io/2015/05/21/rnn-effectiveness/

I recently started reading Build a Large Language Model (From Scratch). Early in the book's introduction, the author mentions that the breakthroughs behind the current surge in LLMs are relatively recent. A 2017 paper (Attention Is All You Need) from a group of researchers, including several at Google Brain, introduced major improvements over RNNs (Recurrent Neural Networks) and LSTMs (Long Short-Term Memory networks). The paper introduced the Transformer architecture, which uses self-attention to enable two important things. First, it allows for better recall of long-range dependencies as input is processed (which helps with the vanishing gradient problem). Second, it overcomes the sequential processing limitation of RNNs, allowing input to be processed in parallel.

Anyway, all of this had me feeling like it would be nice to know a bit more about Recurrent Neural Networks. A top search result I turned up was this Andrej Karpathy blog post from 2015 (two years before the aforementioned Transformer paper). It goes into a good amount of detail about how RNNs, specifically LSTMs, work and has nice diagrams. You might need to brush up on your linear algebra to appreciate the whole thing.
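To see the sequential limitation concretely, here is a minimal plain-Python sketch of a vanilla RNN step, roughly `h = tanh(W_hh · h + W_xh · x)` as the post describes it (not an LSTM, and without the output layer). The weights and one-hot inputs are hypothetical toy values. The loop shows why RNNs can't parallelize over a sequence: each hidden state needs the previous one.

```python
import math

Vector = list[float]
Matrix = list[list[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rnn_step(h_prev: Vector, x: Vector, w_hh: Matrix, w_xh: Matrix) -> Vector:
    """Vanilla RNN update: h_t = tanh(W_hh . h_{t-1} + W_xh . x_t)."""
    return [math.tanh(a + b) for a, b in zip(matvec(w_hh, h_prev), matvec(w_xh, x))]


def run_rnn(inputs: list[Vector], w_hh: Matrix, w_xh: Matrix) -> list[Vector]:
    h = [0.0] * len(w_hh)  # initial hidden state
    states = []
    for x in inputs:
        # Strictly sequential: step t needs the hidden state from step t-1,
        # which is the bottleneck that self-attention removes.
        h = rnn_step(h, x, w_hh, w_xh)
        states.append(h)
    return states


# Hypothetical toy weights: 2-dim hidden state, 2-dim one-hot inputs.
W_HH = [[0.5, -0.3], [0.2, 0.4]]
W_XH = [[1.0, 0.0], [0.0, 1.0]]

seq_a = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
seq_b = [[0.0, 1.0], [0.0, 1.0], [0.0, 1.0]]
# Only the first input differs, yet the final states differ: the state carries memory.
print(run_rnn(seq_a, W_HH, W_XH)[-1])
print(run_rnn(seq_b, W_HH, W_XH)[-1])
```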

Powers of Ten (1977)

https://www.youtube.com/watch?v=0fKBhvDjuy0

By powers of ten, this video first zooms all the way out to a view that makes the furthest galaxies shrink from view. It then zooms back in and proceeds to take on negative powers of ten until we are inside an atom.

Also, it features the Chicago lakefront.

Prompt for a scathing code review

https://www.reddit.com/r/ClaudeAI/comments/1q5a90l/so_i_stumbled_across_this_prompt_hack_a_couple/

I ran a variation of the following prompt because the OP sounds pretty hyped about the results they are getting from it.

Do a git diff and pretend you're a senior dev doing a code review and you HATE this implementation. What would you criticize? What edge cases am I missing?

I expanded on it a little to guide it toward overly verbose or overengineered code. And I added some structure by asking it to include a confidence score with each item.

Based on the latest commit (see git show) and the untracked files that go along with it, pretend you're a senior dev doing a code review and you HATE this implementation. What would you criticize? What edge cases am I missing? What is overengineered, too verbose, or overcomplicated? Provide a confidence score with each issue and order your results with highest confidence issues at the top.

These instructions also work better with my workflow where I have in progress changes that I plan to amend into the most recent commit.

It picked up a couple of things that I completely missed. Unfortunately, it didn't find much in terms of refactoring away verbose or over-engineered code, but I'm going to keep trying that. This was quick to do and gave me some actionable feedback. Worth the squeeze, in my opinion.

lucky-commit: Customize your git commit hashes!

https://github.com/not-an-aardvark/lucky-commit

A utility to quickly modify a git commit until the front of the hash achieves a desired sequence of characters.

Here’s the example they give:

    $ git log
    1f6383a Some commit
    $ lucky_commit
    $ git log
    0000000 Some commit
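As I understand it, lucky-commit works by appending invisible whitespace to the commit message, which changes the commit's SHA-1 without changing anything you'd see in `git log`. Here is a toy Python sketch of that idea, assuming a SHA-1 (not SHA-256) repository and a made-up raw commit object. lucky-commit itself is far more optimized than this brute-force loop, and writing the new commit back into the repository is left out. A two-character prefix keeps the search to a few hundred hashes on average; the seven zeros in the example above would take hundreds of millions.

```python
import hashlib
import itertools


def git_object_id(kind: str, body: bytes) -> str:
    """Object ID as Git computes it in SHA-1 repos: sha1(b"<kind> <size>\\0" + body)."""
    header = f"{kind} {len(body)}".encode() + b"\0"
    return hashlib.sha1(header + body).hexdigest()


def find_lucky_padding(commit_body: bytes, prefix: str, max_len: int = 24) -> tuple[bytes, str]:
    """Try strings of spaces and tabs (shortest first) appended to the commit body
    until the resulting commit ID starts with `prefix`."""
    for length in range(1, max_len + 1):
        for padding in itertools.product(b" \t", repeat=length):
            candidate = commit_body + bytes(padding)
            oid = git_object_id("commit", candidate)
            if oid.startswith(prefix):
                return candidate, oid
    raise ValueError("no match; try a shorter prefix or a larger max_len")


# Hypothetical raw commit object (4b825dc... is Git's well-known empty tree ID).
body = (
    b"tree 4b825dc642cb6eb9a060e54bf8d69288fbee4904\n"
    b"author Jane Doe <jane@example.com> 1700000000 +0000\n"
    b"committer Jane Doe <jane@example.com> 1700000000 +0000\n"
    b"\n"
    b"Some commit\n"
)
print(git_object_id("commit", body))
lucky_body, lucky_oid = find_lucky_padding(body, "00")
print(lucky_oid, repr(lucky_body[len(body):]))
```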

Learned about this from this blog post.

Plan to Throw One Away

https://course.ccs.neu.edu/cs5500f14/Notes/Prototyping1/planToThrowOneAway.html

The idea of "plan to throw one away" comes from Fred Brooks' The Mythical Man-Month. The reasoning is that your first attempt to build a system is going to be a mess because there is so much you don't know, so you might as well plan to throw that one away.

Sometimes we try to do this. We say we are going to build a prototype to explore a space and see if an idea works. More often than not those prototypes are what make it directly into production. It's hard to argue with working software, even if it has its warts.

There is also the Second System Effect to deal with. This idea also comes from Brooks.

The general tendency is to over-design the second system, using all the ideas and frills that were cautiously sidetracked on the first one.

We've eliminated so much risk by clearing up a bunch of unknowns, why not add some back in by layering in some extra concepts.

AWK technical notes

https://maximullaris.com/awk_tech_notes.html

I’ve used awk in the middle of many a one-liner, but it never occurred to me that it could be used as a programming language.

Lots of fun tidbits about the syntax and functionality in this post. The section about $ used as an operator caught my attention most; you could write some pretty obfuscated scripts with that.

The Best Line Length

https://blog.glyph.im/2025/08/the-best-line-length.html

Entertaining and informative read on why, even today with ultrawide screens, it is important and useful to have a line length of something like 88 characters.

There has been a surprising amount of scientific research around this issue, but in brief, there’s a reason here rooted in human physiology: when you read a block of text, you are not consciously moving your eyes from word to word like you’re dragging a mouse cursor, repositioning continuously. Human eyes reading text move in quick bursts of rotation called “saccades”. In order to quickly and accurately move from one line of text to another, the start of the next line needs to be clearly visible in the reader’s peripheral vision in order for them to accurately target it.

Plus I learned about the term “saccade”.

You should separate your billing from entitlements

https://arnon.dk/why-you-should-separate-your-billing-from-entitlement/

The last two products I worked on benefited greatly from adding a separate concept of entitlements so that access to features could be mediated with more flexibility than a billing_status == 'active' check.

So herein lies the problem: if you ever want to make any change to your company’s offering, or if you find yourself expanding to new territories, you really should have a separate mechanism to handle entitlements.

In blunt terms:

- If you will change what features are included in a plan, you should have a separate entitlement system.

- If you think you won’t want to force customers onto new plans, you should have a separate entitlement system.

- If some features are optional add-ons, you should have a separate entitlement system.

- If you ever hope to expand to new countries and markets, you should have a separate entitlement system.

- If you want to let customers experience features separately from their billing status, you guessed it… You should have a separate entitlement system!
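A minimal sketch of what "separate" can mean in code, assuming a tiny in-memory model. The names `PLAN_FEATURES`, `Account`, `add_ons`, `grants`, and `revoked` are hypothetical, not from the article. Feature checks ask the entitlement layer, and billing status is only one input to it, so plan changes, add-ons, trials, and grandfathered features don't require touching every `billing_status == 'active'` check.

```python
from dataclasses import dataclass, field

# Hypothetical plan catalog: which features each plan grants today.
PLAN_FEATURES: dict[str, set[str]] = {
    "basic": {"projects", "exports"},
    "pro": {"projects", "exports", "sso", "audit_log"},
}


@dataclass
class Account:
    plan: str
    billing_status: str  # e.g. "active", "past_due", "canceled"
    add_ons: set[str] = field(default_factory=set)
    grants: set[str] = field(default_factory=set)  # trials, comps, grandfathered features
    revoked: set[str] = field(default_factory=set)


def entitlements(account: Account) -> set[str]:
    """Compute what the account may use; billing status is one input, not the whole answer."""
    if account.billing_status == "canceled":
        # A policy decision that lives in one place: lapsed accounts keep only explicit grants.
        features = set(account.grants)
    else:
        features = set(PLAN_FEATURES.get(account.plan, set())) | account.add_ons | account.grants
    return features - account.revoked


def can_use(account: Account, feature: str) -> bool:
    # Call sites ask about a feature, never about billing directly.
    return feature in entitlements(account)


trial = Account(plan="basic", billing_status="active", grants={"sso"})  # trying a pro feature
print(can_use(trial, "sso"), can_use(trial, "audit_log"))
```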

Assorted less(1) tips

https://blog.thechases.com/posts/assorted-less-tips/

I love an article like this where a person demonstrates a bunch of niche features of a tool they are a power user of. In this case, less.

I didn’t know there was much more to do with less than pipe it a bunch of stdout or view a log file with it.

A couple of features that stood out to me were:

- using -N and -n to toggle line numbers on and off

- doing successive filtering with multiple &pattern invocations

- pulling in and navigating multiple files (still not totally sure of the workflow I’d use this for)

How To Not Be Replaced by AI

https://www.maxberry.ca/p/how-to-not-be-replaced-by-ai

You don’t compete with AI.

You amplify yourself with it.

You become the expert who knows when to trust the machine and when to override it.

The market will pay a premium for people who can be trusted with high-stakes decisions, not people who can produce high volumes of low-stakes work.

Your job is to deliver code you have proven to work

https://simonwillison.net/2025/Dec/18/code-proven-to-work/

To master these tools you need to learn how to get them to prove their changes work as well.

I’ve been using Claude Code to automate a series of dependency upgrades on a side project. I was surprised by the number of times it has confidently told me it fixed such-and-such issue and that it can now see that it works, when in fact it doesn’t work. I had to give it additional tests and tooling to verify the changes it was making had the intended effect.

I was surprised because it had deftly accomplished the previous several upgrade steps.

Almost anyone can prompt an LLM to generate a thousand-line patch and submit it for code review. That’s no longer valuable. What’s valuable is contributing code that is proven to work.

The step beyond this that I also view as table stakes is ensuring the code meets team standards, follows existing patterns and conventions, and doesn’t unreasonably accrue technical debt.

Rails’ Partial Features You (Didn’t) Know

https://railsdesigner.com/rails-partial-features/

I'm a big fan of the way this article started with the basics and then layered in feature after feature in an approachable way, with examples that made me feel like I could apply these tips directly to my current codebase.

Though I knew most of this, it made for a useful refresher. I also liked how it connected the verbose versions (what I usually write) with the minimal, full-convention versions (e.g. rendering a partial collection with <%= render @users %>).

Both the layout and spacer_template options for a partial collection were new to me. TIL!

h/t Garrett Dimon

How to write a great agents.md: Lessons from over 2,500 repositories

https://github.blog/ai-and-ml/github-copilot/how-to-write-a-great-agents-md-lessons-from-over-2500-repositories/

Put commands early: Put relevant executable commands in an early section: npm test, npm run build, pytest -v. Include flags and options, not just tool names. Your agent will reference these often.

I’ve seen big improvements in how agents do with my codebase when I include exactly what command and flags should be used for things like running the test suite.

Cover six core areas: Hitting these areas puts you in the top tier: commands, testing, project structure, code style, git workflow, and boundaries.

Covering these areas is a good starting point. I’m usually not sure where to start and only add things as needed.

Advice for good puzzle design

https://www.reddit.com/r/gamedesign/comments/qgoxql/comment/hi7v47o/

Insightful list of tips on how to approach designing a puzzle. It's all very user-centric. The one that really stands out to me is: "A puzzle is not there to stump the player, it is there to be solved in a way that makes the player feel smart or skilled." That's what makes a puzzle fun and rewarding.

- Start by designing the most simple, basic puzzles to show players how the mechanics work. You can build from those basic examples to create more complex and difficult puzzles.

- Complexity and difficulty are not the same thing. A simple puzzle that works well is much more interesting than a very complex puzzle.

- Think about the aim of a puzzle. A lot of puzzle designers get this wrong. A puzzle is not there to stump the player, it is there to be solved in a way that makes the player feel smart or skilled.

- Misdirect the player, don't lie to them.

- Puzzles come from blocking the player. Think of how you can block off the obvious solution to make the player find an alternative

- Try deconstructing mechanics from popular games. How does Portal use the portals in different ways? How are portals and companion cubes combined to create puzzles?

- Play The Witness and think about how each section of the game teaches you how each sub-mechanic works by gradually forcing you to understand its different properties. That game is practically a meta exercise in showing how puzzles are created.

- Keep puzzles efficient. Don't force the player to spend ages doing the easy parts, and don't require the player to have pixel perfect movement or mad platforming skills (unless that's your game). Try to avoid puzzles that can get to an unsolvable state.

Conway's Law

https://martinfowler.com/bliki/ConwaysLaw.html

In Martin Fowler's words:

Conway's Law is essentially the observation that the architectures of software systems look remarkably similar to the organization of the development team that built it. It was originally described to me by saying that if a single team writes a compiler, it will be a one-pass compiler, but if the team is divided into two, then it will be a two-pass compiler. Although we usually discuss it with respect to software, the observation applies broadly to systems in general.

From Melvin Conway himself:

Any organization that designs a system (defined broadly) will produce a design whose structure is a copy of the organization's communication structure.

I've read about Conway's Law before and I see it get brought up from time to time in online discourse. Something today made it pop into my brain and as I was thinking about it, I felt that I was looking at it with my head cocked to the side a little, just different enough that it helped me understand it a little better.

I tend to work on small, distributed software teams that work in an async fashion. That means minimal meetings, primarily high-agency independent work, clear and distinct streams of work, and everyone making their own schedule to get their work done.

I had been thinking about the kinds of things you need to have in place in your codebase and software system to make that way of working work well. A monolith is compatible with minimal, async communication because there aren't lots of distributed pieces that need coordinating. As another example, deploying things behind feature flags so that they can be released incrementally on a schedule separate from deployments also lends itself to this way of working.

The way our team has decided to organize itself and communicate has a direct impact on how we develop the software system and what the system looks like.

Punycode: My New Favorite Algorithm

https://www.iankduncan.com/engineering/2025-12-01-punycode/

I enjoyed reading about this algorithm because it demonstrates some clever tricks and problem solving. It's useful to read about new algorithms from time to time, if for no other reason than as a source of inspiration to keep you actively thinking about unique ways to solve problems.

A high level summary of what makes it so neat: the algorithm uses adaptive bias adjustment, variable-length encoding, and delta compression to achieve extremely dense (in the information-theoretic sense) results for both single-script domains (like German bücher-café.de or Chinese 北京-旅游.cn) and mixed-script domains (like hello世界.com). What makes it particularly elegant is that it does this without any shared state between encoder and decoder. The adaptation is purely a deterministic function of the encoded data itself. This is a really cool example of how you can use the constraints of a system to your advantage to solve a problem.
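To make the bias adaptation and variable-length deltas more concrete, here is a minimal sketch of the encoding side of Punycode, written from the pseudocode in RFC 3492 rather than taken from the article. It skips the RFC's overflow checks and the IDNA layer on top (the xn-- prefix, normalization, per-label splitting), and it can be checked against Python's built-in punycode codec.

```python
# Constants from RFC 3492, section 5
BASE, TMIN, TMAX, SKEW, DAMP = 36, 1, 26, 38, 700
INITIAL_BIAS, INITIAL_N = 72, 128
DIGITS = "abcdefghijklmnopqrstuvwxyz0123456789"  # digit values 0..35


def adapt(delta: int, numpoints: int, first_time: bool) -> int:
    """Recompute the bias from the delta just emitted (RFC 3492, section 6.1)."""
    delta = delta // DAMP if first_time else delta // 2
    delta += delta // numpoints
    k = 0
    while delta > ((BASE - TMIN) * TMAX) // 2:
        delta //= BASE - TMIN
        k += BASE
    return k + ((BASE - TMIN + 1) * delta) // (delta + SKEW)


def punycode_encode(text: str) -> str:
    code_points = [ord(ch) for ch in text]
    # Basic (ASCII) code points are copied through unchanged, then a delimiter
    output = [ch for ch in text if ord(ch) < 0x80]
    h = b = len(output)
    if b:
        output.append("-")

    n, delta, bias = INITIAL_N, 0, INITIAL_BIAS
    while h < len(code_points):
        # Next non-basic code point to insert, in ascending order
        m = min(c for c in code_points if c >= n)
        # Delta compression: encode the gap in (code point, position) space
        delta += (m - n) * (h + 1)
        n = m
        for c in code_points:
            if c < n:
                delta += 1
            elif c == n:
                # Emit delta as a variable-length integer whose digit
                # thresholds depend on the current bias
                q, k = delta, BASE
                while True:
                    t = TMIN if k <= bias else TMAX if k >= bias + TMAX else k - bias
                    if q < t:
                        break
                    output.append(DIGITS[t + (q - t) % (BASE - t)])
                    q = (q - t) // (BASE - t)
                    k += BASE
                output.append(DIGITS[q])
                # Adaptation is a pure function of the data already encoded,
                # so a decoder can recompute the same bias without shared state
                bias = adapt(delta, h + 1, h == b)
                delta = 0
                h += 1
        delta += 1
        n += 1
    return "".join(output)
```

For example, punycode_encode("bücher") returns "bcher-kva", which is why the IDNA form of that label is xn--bcher-kva.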

Security beyond the model: Introducing AI system cards

https://www.redhat.com/en/blog/security-beyond-model-introducing-ai-system-cards

In practice, end users engage with systems, not raw models, which is why a single foundational model can power hundreds of tailored solutions across domains. Without the surrounding infrastructure of an AI system, even the most advanced model remains untapped potential rather than a tool that solves real‑world problems.

System Prompts - Claude Docs

https://platform.claude.com/docs/en/release-notes/system-prompts

Simon Willison shared this addition to Claude’s system prompts:

If the person is unnecessarily rude, mean, or insulting to Claude, Claude doesn't need to apologize and can insist on kindness and dignity from the person it’s talking with. Even if someone is frustrated or unhappy, Claude is deserving of respectful engagement.

It’s very interesting to read through the full prompts to see all the different kinds of instruction they start with.

Anthropic CEO: AI Will Be Writing 90% of Code in 3 to 6 Months

https://www.businessinsider.com/anthropic-ceo-ai-90-percent-code-3-to-6-months-2025-3

Around March 14th, 2025:

"I think we will be there in three to six months, where AI is writing 90% of the code. And then, in 12 months, we may be in a world where AI is writing essentially all of the code," Amodei said at a Council of Foreign Relations event on Monday.

Amodei said software developers would still have a role to play in the near term. This is because humans will have to feed the AI models with design features and conditions, he said.

Via this Armin Ronacher article, via this David Crespo Bluesky post.

Color Palette Pro

https://colorpalette.pro/

This is a very cool color picker tool that has the look and feel of a physical piece of hardware like a synthesizer.

How I use Claude Code for real engineering

https://www.youtube.com/watch?v=kZ-zzHVUrO4

One of the first tips from this video that jumped out at me was a rule near the top of Matt Pocock's system-level CLAUDE.md file:

- In all interactions and commit messages, be extremely concise and sacrifice grammar for the sake of concision.

My initial experience with Claude Code is that it is verbose, excessively so. To counteract that a bit, adding this as a top-level rule to Claude's memory seems very useful.

Another thing that Matt recommends having in CLAUDE.md as part of any planning mode work is:

- At the end of each plan, give me a list of unresolved questions to answer, if any. Make the questions extremely concise. Sacrifice grammar for the sake of concision.

This gives the human a chance to clarify things and make adjustments before finalizing the plan.

Later as Claude Code gets to the end of writing the first pass of a plan, Matt gets the sense that executing on the entire plan is going to completely overrun the context window. So, he instructs CC to:

make the plan multi-phase

Free, Open-Source Online database diagram editor and SQL generator

https://www.drawdb.app/

When you search for a SQL / Database diagramming tool, most results are for products with a shallow free tier that then leads to a steep per-month subscription fee. I knew I had seen a free and open-source database diagramming tool at some point. After a bit more searching I came back across DrawDB.

Understanding Jujutsu bookmarks

https://neugierig.org/software/blog/2025/08/jj-bookmarks.html

This article explains how jj doesn't have the concept of a branch and how you instead can use bookmarks to achieve a similar effect and interop with git.

At the end of the article are several useful jj workflows, such as "Workflow: working alone":

If you are just making changes locally and just want to push your changes to main, you must update the bookmark before pushing with a command like jj bookmark set main -r @. This is currently the clunkiest part of jj. There have been conversations in the project about how to improve it. If you search for jj tug online you will see a common alias people set up to automate this.

This isn't the first time I've seen someone mention the jj tug alias.

ActiveSupport::CurrentAttributes

https://api.rubyonrails.org/classes/ActiveSupport/CurrentAttributes.html

This can be used to house request-specific attributes needed across your application. A prime example of this is Current.user as well as authorization details like Current.role or Current.abilities.

A word of caution: It’s easy to overdo a global singleton like Current and tangle your model as a result. Current should only be used for a few, top-level globals, like account, user, and request details. The attributes stuck in Current should be used by more or less all actions on all requests. If you start sticking controller-specific attributes in there, you’re going to create a mess.

What to do (and not do!) During Taper

https://maggiebowman.substack.com/p/what-to-do-and-not-do-during-taper

This article is a quick read with a list of "Dos" and "Don'ts" as you enter the final weeks of training (taper!) before your marathon.

Lots of great advice, much of which comes down to focusing on what you can control and not worrying about what you can't. And trust your training!

Don't ask to ask, just ask

https://dontasktoask.com/

Someone made an entire web page describing a pretty common communication anti-pattern that I see in every organization I work in.

You'll be heads down on a problem and then get a DM that says, "Can I ask you something?" or even just "Hey".

Now I have to make a call, based on very little info, whether to immediately break from my current stream of work or break from it when I come to a stopping point.

I could make that call more effectively with actual details on what the ask is, or I could quickly point you to someone who can be of more help.

With "Can I ask you something?", the onus is now on me to ask follow up questions to pull the details of the ask out of the person.

This also intersects with another communication anti-pattern I see all the time: asking a question in a DM when posting it in a public channel would "expose more surface area" for the question to be answered.

The solution is not to ask to ask, but just to ask. Someone who is idling on the channel and only every now and then glances what's going on is unlikely to answer to your "asking to ask" question, but your actual problem description may pique their interest and get them to answer.

I don’t want AI agents controlling my laptop – Sophie Alpert

https://sophiebits.com/2025/09/09/ai-agents-security

There’s no good way to say “allow access to everything on my computer, except for my password manager, my bank, my ~/.aws/credentials file, and the API keys I left in my environment variables”. Especially with Simon Willison’s lethal trifecta, you don’t really want to be giving access to these things, even if most of the time, nothing bad happens.

Jujutsu for everyone

https://jj-for-everyone.github.io/

An introductory tutorial to jujutsu that is built to be accessible for someone who doesn’t have experience with Git.

If you are already experienced with Git, I recommend Steve Klabnik's tutorial instead of this one.

What Happened When I Tried to Replace Myself with ChatGPT in My English Classroom

https://lithub.com/what-happened-when-i-tried-to-replace-myself-with-chatgpt-in-my-english-classroom/

Like many teachers at every level of education, I have spent the past two years trying to wrap my head around the question of generative AI in my English classroom. To my thinking, this is a question that ought to concern all people who like to read and write, not just teachers and their students.

Each student submitted a draft on a common subject, and when the teacher presented all the titles together, you could see a plagiaristic convergence.

I expected them to laugh, but they sat in silence. When they did finally speak, I am happy to say that it bothered them. They didn’t like hearing how their AI-generated submissions, in which they’d clearly felt some personal stake, amounted to a big bowl of bland, flavorless word salad.

Via conputer dipshit

Life Altering Postgresql Patterns

https://mccue.dev/pages/3-11-25-life-altering-postgresql-patterns

Use UUID Primary Keys

I don’t have strong opinions on this one. Most of the systems I work on would do just as well with bigints as UUIDs. Now that v7 UUIDs are generally available, there is an even stronger argument to go that direction since they are sortable.
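Since sortability is the main argument for v7, here is a minimal sketch of how a v7 UUID is laid out according to RFC 9562: a 48-bit Unix timestamp in milliseconds in the most significant bits, then the version and variant bits, with the remaining bits random. The uuid7 function here is my own illustration; in practice you'd reach for a built-in generator in your language, database, or framework where one exists.

```python
import os
import time
import uuid
from typing import Optional


def uuid7(unix_ms: Optional[int] = None) -> uuid.UUID:
    """Minimal UUIDv7 sketch following the RFC 9562 bit layout."""
    if unix_ms is None:
        unix_ms = time.time_ns() // 1_000_000
    rand = int.from_bytes(os.urandom(10), "big")  # 80 random bits; 74 are used
    value = (unix_ms & ((1 << 48) - 1)) << 80  # bits 127-80: Unix time in ms
    value |= 0x7 << 76                        # bits 79-76: version 7
    value |= ((rand >> 62) & 0xFFF) << 64     # bits 75-64: rand_a (12 bits)
    value |= 0b10 << 62                       # bits 63-62: RFC variant
    value |= rand & ((1 << 62) - 1)           # bits 61-0: rand_b (62 bits)
    return uuid.UUID(int=value)
```

Because the timestamp occupies the leading bits, IDs created in later milliseconds sort after earlier ones, which keeps B-tree index inserts mostly append-only. Two IDs created in the same millisecond are ordered randomly relative to each other; RFC 9562 describes optional counter-based methods if you need strict monotonicity.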

Give everything created_at and updated_at

I absolutely agree with this. These columns should be used not as app logic (except sorting), but for auditing and investigation purposes. I’ve always needed these at one point or another to look into some production data issue or support request.

on update restrict on delete restrict

It’s good to have default practices for this kind of thing, but absolutes aren’t that useful. Sometimes you want a cascade and as long as you’ve thought through the details, that may be the right choice.

Use schemas

The default public schema is great. Using alternative schemas usually means having to fight conventions in web frameworks like Rails. It might be worth it, but know that you’ll generally be cutting against the grain if you go this direction.

Enum Tables

Enum tables are a great pattern, but I would caution against using a text value as the primary key. Use an integer key of some size that won’t change even if the text value does. That way you don’t have to make a bunch of updates in referencing tables.
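Here is a small sketch of the integer-keyed enum table pattern. It uses SQLite from Python's standard library so it runs anywhere (in Postgres you'd likely use smallint for the key), and the order_status and orders tables are made up for illustration. Renaming a label touches a single row in the enum table and none of the referencing rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces FKs when asked
conn.executescript(
    """
    -- Enum table: a stable integer key plus a human-readable label
    CREATE TABLE order_status (
        id   INTEGER PRIMARY KEY,
        name TEXT NOT NULL UNIQUE
    );
    CREATE TABLE orders (
        id        INTEGER PRIMARY KEY,
        status_id INTEGER NOT NULL REFERENCES order_status (id)
    );
    INSERT INTO order_status (id, name) VALUES (1, 'pending'), (2, 'shipped');
    INSERT INTO orders (status_id) VALUES (1), (2), (2);
    """
)

# Renaming a label is a one-row update; no rows in `orders` change
conn.execute("UPDATE order_status SET name = 'dispatched' WHERE name = 'shipped'")

rows = conn.execute(
    "SELECT o.id, s.name FROM orders AS o "
    "JOIN order_status AS s ON s.id = o.status_id ORDER BY o.id"
).fetchall()
print(rows)  # [(1, 'pending'), (2, 'dispatched'), (3, 'dispatched')]
```

The foreign key still guards against values that aren't in the enum table, so you keep the integrity benefit of a database enum without its awkward renames.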

Name your tables singularly

I see no benefit to singular naming. I prefer plural naming because of my time using Rails which establishes that as a convention. Whichever direction you go, make sure to be consistent.

Mechanically name join tables

I think this is a good practice to follow. Migration tools like Rails’ ActiveRecord often do this in a deterministic way for you. Occasionally it is nice to have a perfectly named join table based on domain, but you inevitably falter when trying to later remember what tables it joins. It’s a small thing, but consistency generally wins.
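As an illustration of what "mechanical" naming looks like, here is a Python sketch of the rule Rails uses, as I understand it, when deriving a join table name: sort the two table names lexically, join them with an underscore, and collapse a shared prefix. The join_table_name function is hypothetical, not a Rails API.

```python
import re


def join_table_name(table_1: str, table_2: str) -> str:
    """Derive a join table name the way Rails does, as I understand its rule."""
    # Sort lexically so the result doesn't depend on argument order
    first, second = sorted([table_1, table_2])
    joined = f"{first}\x00{second}"
    # Collapse a shared "prefix_": music_artists + music_records -> music_artists_records
    joined = re.sub(r"^(.*_)(.+)\x00\1(.+)", r"\1\2_\3", joined)
    return joined.replace("\x00", "_")
```

For example, products and categories always produce categories_products, regardless of which one you mention first, which is exactly what makes the name easy to reconstruct later.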

Almost always soft delete

This used to be my stance for any core application tables. However since listening to the Soft Deletes episode of Postgres.fm, I’ve realized it’s way more nuanced than I thought and you often don’t want soft deletes. It’s worth a listen.

…

How I keep up with AI progress

http://blog.nilenso.com/blog/2025/06/23/how-i-keep-up-with-ai-progress/

To help with this, I’ve curated a list of sources that make up an information pipeline that I consider balanced and healthy. If you’re late to the game, consider this a good starting point.

Their top recommendation of what to follow if you were to only follow one thing is the same as mine — Simon Willison’s blog.

But don’t stop there: this article is jam-packed with links to other people and resources in the AI and LLM space.

Python Resources for Experienced Developers

https://www.reddit.com/r/Python/s/i6Ep6OhDOu

These are three books recommended to read, in this order (but, of course, choose your own adventure):

Adding a feature because ChatGPT incorrectly thinks it exists

https://www.holovaty.com/writing/chatgpt-fake-feature/

This developer noticed a bunch of people were being referred to their product by ChatGPT for a feature that doesn’t exist. They decided to meet the demand and build the feature.

Hallucination Driven Development!

The author also highlights the challenge of LLM hallucinations creating false expectations and disappointment about a product.

Human Interface Guidelines | Apple Developer Documentation

https://developer.apple.com/design/human-interface-guidelines

A friend mentioned that whenever he is making UI decisions that he doesn't already have strong opinions on, he likes to reference Apple's HIG (Human Interface Guidelines) to see what they have to say. It's comprehensive and full of hard-won UI and UX knowledge from a company with a long-term track record of making usable products for hundreds of millions of people.

Context engineering

https://simonwillison.net/2025/Jun/27/context-engineering/

As Simon argues here, “prompt engineering” as a term didn’t really catch on. Perhaps “context engineering” better captures the task of providing just the right context to an LLM to get it to most effectively solve a problem.

It turns out that inferred definitions are the ones that stick. I think the inferred definition of "context engineering" is likely to be much closer to the intended meaning.

Making Tables Work with Turbo

https://www.guillermoaguirre.dev/articles/making-tables-work-with-turbo

This article shows how to work around some gotchas that come up when using Turbo with HTML tables. The issue is that regardless of how you structure your HTML, some elements like <form> get shifted around or un-nested by the browser's HTML parser when they appear inside a table.

Some of the key takeaways:

- take advantage of the dom_id helper

- stream to an ID on a standard tag without necessarily having added a turbo_frame_tag

- associate a submit button with a form elsewhere on the page by setting the button's form attribute to that form's ID

Instantiate a custom Rails FormBuilder without using form_with

https://justin.searls.co/posts/instantiate-a-custom-rails-formbuilder-without-using-form_with/

I was working with a partial that I wanted to stream to the page with Hotwire. This partial rendered a form element that would be streamed inline into a form already on the page. The form input helper needs a Rails FormBuilder object to render properly, but in the controller action that streams the partial as the response, I don't have access to the relevant form object, so rendering raises an error.

Then I found this article from Justin Searls describing a FauxFormObject which can help get around this issue. I made it available as a helper, referenced the instance in the partial, made sure the form element name followed convention, and then all was working.

GitHub - thoughtbot/hotwire-example-template: A collection of branches that transmit HTML over the wire.

https://github.com/thoughtbot/hotwire-example-template

This is a GitHub repo I've seen recommended by Go Rails for learning and experimenting with different Hotwire features and concepts. Each branch is an example with working code to go through.

I learned about this repo from a Go Rails video, or one of the nearby ones.

The most underrated Rails helper: dom_id

https://boringrails.com/articles/rails-dom-id-the-most-underrated-helper/

The dom_id helper allows you to create reproducible but reasonably unique identifiers for DOM elements, which is useful for things like anchor tags, turbo frames, and rendering from turbo streams.
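To show the naming convention concretely, here is a tiny Python mimic of the ids that dom_id produces. It is not the Rails implementation: record_name is an assumed stand-in for the model's singular name, and record_id for its primary key (None for an unsaved record).

```python
from typing import Optional


def dom_id(record_name: str, record_id: Optional[int] = None, prefix: Optional[str] = None) -> str:
    """Mimic the ids Rails' dom_id generates, e.g. "user_42" or "edit_user_42"."""
    if record_id is None:
        # Unsaved records have no id, so the name falls back to a "new_" prefix
        return f"{prefix or 'new'}_{record_name}"
    base = f"{record_name}_{record_id}"
    return f"{prefix}_{base}" if prefix else base
```

Because the id is derived from the record itself, the view that renders an element and the Turbo Stream that later replaces it arrive at the same id independently, without anyone passing it around.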

If you are useful, it doesn’t mean you are valued

https://betterthanrandom.substack.com/p/if-you-are-useful-it-doesnt-mean

If you’re valued, you’ll likely see a clear path for advancement and development, you might get more strategic roles and involvement in key decisions. If you are just useful, your role might feel more stagnant.

I’m thinking of one role in particular where I was useful, but not valued. I was one of the more competent and senior people on the dev team, but I wasn’t part of strategic conversations and leadership wasn’t investing in me. I wanted to do important, valuable things, but it wasn’t an option. It was a bummer.

I was in a role earlier in my career where I kept getting more responsibilities, opportunities, and significant raises, but it wasn’t until a couple of jobs later that I realized how valued I was there. Part of why I didn’t realize it is that leadership didn’t express it beyond those things.

This article was shared with me by Jake Worth.

AI didn’t kill Stack Overflow

https://www.infoworld.com/article/3993482/ai-didnt-kill-stack-overflow.html

For Stack Overflow, the new model, along with highly subjective ideas of “quality” opened the gates to a kind of Stanford Prison Experiment. Rather than encouraging a wide range of interactions and behaviors, moderators earned reputation by culling interactions they deemed irrelevant. Suddenly, Stack Overflow wasn’t a place to go and feel like you were part of a long-lived developer culture. Instead, it became an arena where you had to prove yourself over and over again.

This reminded me of a comment that a friend made to me about StackOverflow. Notably, he has been on the tough end of over-eager moderation.

I do think that the moderation changes were bad. I don't know what problems they were trying to solve, or if it worked. But I watched so many good-faith questions and answers get absolutely smacked down by the moderators… Not how I think beginners should be treated.

Related: StackOverflow is Almost Dead

Catbench Vector Search App has Postgres Query Throughput and Latency Monitoring Now

https://tanelpoder.com/posts/catbench-vector-search-query-throughput-latency-monitoring/

The whole app is meant to be a learning tool, an easy way to run some vector similarity search workloads (together with other application data) and be able to see the various query texts, execution plans and plan execution metrics when you navigate around.

Why agents are bad pair programmers

https://justin.searls.co/posts/why-agents-are-bad-pair-programmers/

Justin describes some of the ways that using LLM coding agents as pair programmers can lead to bad pair-programming patterns. In particular, one person takes over while the other disengages or becomes a rubber stamp for their changes.

He then throws out some ideas for ways that LLM coding assistants can be improved with what we’ve learned over the years from person-to-person pairing.

My preference is what he describes here:

throttle down from the semi-autonomous "Agent" mode to the turn-based "Edit" or "Ask" modes. You'll go slower, and that's the point.

One suggestion is something we can do now (out-of-band or at least front-loaded).

Design agents to act with less self-confidence and more self-doubt. They should frequently stop to converse: validate why we're building this, solicit advice on the best approach, and express concern when we're going in the wrong direction

Tell the LLM you want to approach things this way, that you want to break the problem down and tease out any issues with the proposed approach. Engage in some back and forth, have it generate a plan, and then go from there.

Understanding logical replication in Postgres

https://www.springtail.io/blog/postgres-logical-replication

What is replication in a Postgres database?

PostgreSQL database replication is a process of creating and maintaining multiple copies of a database across different servers. This technique is crucial for ensuring data availability, improving performance, and enhancing disaster recovery capabilities.

This page from the Postgres docs describes all the ways you can approach achieving high-availability and replication with Postgres, including considerations for the tradeoffs.

Physical Replication

Physical replication is the replication of the physical blocks of the Write-Ahead Log after changes are applied. It is beneficial for large-scale replication because it copies data at the block level, making it faster; the data does not have to be summarized as in logical replication. Additionally, physical replication supports streaming the WAL providing near real-time updates.

However, it replicates the entire database cluster, which includes all databases and system tables, making it less flexible for partial replication or replication of only a subset of databases or tables. Changes to the physical layout of data blocks, that may change across Postgres versions, can break compatibility.

Logical Replication

Logical replication exposes a logical representation of changes made within transactions, e.g., an update X was applied to row Y, rather than replicating the physical data blocks of the WAL. This allows for the replication of specific tables or databases, and supports cross-version replication, which makes it more flexible for complex replication scenarios.

However, logical replication can be slower for large-scale replication, requires more configuration and management, and does not support certain database modifications, such as schema changes like ALTER TABLE, and other metadata or catalog changes.

How Instacart Built a Modern Search Infrastructure on Postgres

https://tech.instacart.com/how-instacart-built-a-modern-search-infrastructure-on-postgres-c528fa601d54

Unconventionally, they transitioned from Elasticsearch to Postgres FTS and Vector Search.

A key insight was to bring compute closer to storage. This runs counter to more recent database patterns, where storage and compute layers are separated by networked I/O. The Postgres-based search ended up being twice as fast by pushing logic and computation down to the data layer instead of pulling data up to the application layer for computation. This approach, combined with running Postgres on NVMe drives, further improved data fetching performance and reduced latency.

AMA with Fedor Gorst (Pro Pool Player)

https://www.reddit.com/r/billiards/s/NAm2tuHspS

“Usually the boring stuff is the most helpful.”

In response to a question asking essentially, “are there any core/fundamental drills for pool players to get better, akin to deadlifts and squats in lifting?”

Yes there are. For the most part single shot drills where you focus on fundamentals. Mighty X is one of them. Usually boring stuff is the most helpful.

AI-assisted Coding and the Jevons Paradox

https://lobste.rs/s/42qb2p/i_am_disappointed_ai_discourse#c_6sp5oi

Simon Willison on whether LLMs are going to replace software developers and what these tools mean for our careers:

I do think that AI-assisted development will drop the cost of producing custom software - because smaller teams will be able to achieve more than they could before. My strong intuition is that this will result in massively more demand for custom software - companies that never would have considered going custom in the past will now be able to afford exactly the software they need, and will hire people to build that for them. Pretty much the Jevons paradox applied to software development.

I am disappointed in the AI discourse

https://steveklabnik.com/writing/i-am-disappointed-in-the-ai-discourse/

It’s more that both the pro-AI and anti-AI sides are loudly proclaiming things that are pretty trivially verifiable as not true. On the anti side, you have things like the above. And on the pro side, you have people talking about how no human is going to be programming in 2026. Both of these things fail a very basic test of [is it true?].

Developers spend most of their time figuring the system out

https://lepiter.io/feenk/developers-spend-most-of-their-time-figuri-7aj1ocjhe765vvlln8qqbuhto/

The first thing that jumps out to me from the 2017 study is that on average 20-25% of developer time is spent navigating 😲

This anecdotally explains to me why I find vim so empowering and why I get so frustrated while trying to get where I need to be in other tools like VS Code. Vim (plus plugins) is optimized for navigation, so I can fly from place to place where I need to read code, check a test, make an edit, or create a new file. In other IDEs, I feel like I’m trying to run in a swimming pool.

elvinaspredkelis/signed_params: A lightweight library for encoding/decoding Rails request parameters

https://github.com/elvinaspredkelis/signed_params

This is a clever idea for a small gem: allow request query parameters to be encoded and decoded automatically in the controller to avoid exposing certain kinds of information. It uses Rails’ ActiveSupport::MessageVerifier.

I learned about this from Kasper Timm Hansen.
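The idea behind a message verifier can be sketched with nothing but Python's standard library: serialize the value, then append an HMAC so the server can detect tampering. This is an analogy, not the gem's API or Rails' exact wire format, and SECRET is a placeholder.

```python
import base64
import hashlib
import hmac
import json

SECRET = b"replace-with-a-real-secret"  # placeholder; a Rails app would derive keys from secret_key_base


def sign(payload) -> str:
    """Serialize and base64-encode the payload, then append an HMAC-SHA256 digest."""
    data = base64.urlsafe_b64encode(json.dumps(payload).encode()).decode()
    digest = hmac.new(SECRET, data.encode(), hashlib.sha256).hexdigest()
    return f"{data}--{digest}"


def verify(token: str):
    """Return the original payload, or raise ValueError if the token was altered."""
    # The hex digest never contains "-", so the last "--" is always the separator
    data, _, digest = token.rpartition("--")
    expected = hmac.new(SECRET, data.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(digest, expected):
        raise ValueError("invalid signature")
    return json.loads(base64.urlsafe_b64decode(data))
```

Note what signing does and doesn't buy you: anyone can base64-decode the payload and read it, but they can't change it without invalidating the signature. If the value itself must stay secret, that calls for encryption instead (Rails' counterpart is ActiveSupport::MessageEncryptor).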

Tokyo Ramen Street – Top 3 To Visit

https://www.5amramen.com/post/tokyo-ramen-street

We had a chance to check out Tokyo Ramen Street in the basement of Tokyo Station.

For me, the standout was the dipping ramen at Rokurinsha. It was one of the best bowls of ramen I’ve had over the past 3 weeks: great noodles, rich broth, and an ultra-savory thick cut of pork. My only complaint is that the broth and noodles could have been hotter from the start, as everything was lukewarm halfway through.

Access Control Syntax

https://journal.stuffwithstuff.com/2025/05/26/access-control-syntax/

Munificent Bob breaks down the different ways that many languages manage access control for entities in modules. That is, how do I tell the parts of a program that import a module from somewhere else in the program what is private and what is public?

Big Problems From Big IN lists with Ruby on Rails and PostgreSQL

https://andyatkinson.com/big-problems-big-in-clauses-postgresql-ruby-on-rails

I always enjoy these detailed PostgreSQL query explorations by Andrew.

I’ve seen this exact “big in clause” issue arise in many production Rails systems. These are tricky because the queries are often generated by ActiveRecord and they work fine for small data. As your data grows over time or specific power users do their thing, these sneaky, slow IN queries will start to crop up. Since they only happen sometimes, they often go unnoticed or appear hard to reproduce.

Andrew presents a lot of good options for rewriting these queries in a way that allows the database to better plan and optimize the query.

Spaced Repetition Systems Have Gotten Way Better

https://domenic.me/fsrs/

I’ve used various learning platforms with spaced repetition built in, so I’m familiar with that concept. New to me is the Free Spaced Repetition Scheduler (FSRS), a newer scheduling algorithm.

Commenters on the lobste.rs thread mentioned Ebisu as another learning scheduling resource.
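At the core of FSRS is a forgetting curve that predicts the probability of recall from a memory's stability S, measured in days. Here is a sketch of that curve using the constants from FSRS-4.5, as I understand them; later versions tune the decay, and the full scheduler also updates stability and difficulty after each review, which isn't shown here.

```python
# Constants as used in FSRS-4.5 (later versions make the decay tunable)
DECAY = -0.5
FACTOR = 0.9 ** (1 / DECAY) - 1  # = 19/81, chosen so that R(S, S) = 0.9


def retrievability(days_elapsed: float, stability: float) -> float:
    """Predicted probability of recall after `days_elapsed` days."""
    return (1 + FACTOR * days_elapsed / stability) ** DECAY


def next_interval(stability: float, desired_retention: float = 0.9) -> float:
    """Days until retrievability falls to `desired_retention` (inverts the curve above)."""
    return stability / FACTOR * (desired_retention ** (1 / DECAY) - 1)
```

Because FACTOR is chosen so that retrievability is exactly 0.9 when the elapsed time equals S, stability reads directly as "days until recall probability drops to 90%", and asking for a higher retention shortens the next interval.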

Why some of us like "interdiff" code review systems (not GitHub)

https://gist.github.com/thoughtpolice/9c45287550a56b2047c6311fbadebed2

I learned about git’s range-diff from this article. It’s useful if you adopt this interdiff approach of evolving a patch series rather than adding commits in response to review and CI feedback.

This is the essence of "interdiff code review." You

- Don't publish new changes on top, you publish new versions

- You don't diff between the base branch and the tip of the developer's branch, you diff between versions of commits

- Now, reviewers get an incremental review process, while authors don't have to clutter the history with 30 "address review" noise commits.

- Your basic diagnostic tools work better, with a better signal-to-noise ratio.

Short alphanumeric pseudo random identifiers in Postgres

https://andyatkinson.com/generating-short-alphanumeric-public-id-postgres

I’ve had to add this style of identifier to many apps that I’ve worked on, e.g. for something like coupon codes. I end up with a bespoke solution each time, usually in app code. It’s cool to see a robust solution like this at the database layer by adding a couple functions.
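For comparison with the article's database-layer approach, this is roughly the kind of app-code version I tend to end up with: a random base62 string drawn from a cryptographically secure source. It is not the article's technique, the function name and default length are arbitrary choices, and you still want a UNIQUE constraint (plus a retry on conflict) in the database.

```python
import secrets
import string

ALPHABET = string.ascii_letters + string.digits  # base62: a-z, A-Z, 0-9


def short_public_id(length: int = 8) -> str:
    """Random base62 identifier drawn from a cryptographically secure source."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))
```

With 8 characters there are 62^8 (about 2.2 × 10^14) possible codes. For codes people type by hand, like coupons, consider dropping look-alike characters such as 0/O and 1/l/I from the alphabet.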

Claude and I write a utility program

https://blog.plover.com/tech/gpt/claude-xar.html

Just In Time manual reading

The world is full of manuals, how can I decide which ones I should read? One way is to read them all, which used to work back when I was younger, but now I have more responsibilities and I don't have time to read the entire Python library reference including all the useless bits I will never use. But here's Claude pointing out to me that this is something I need to know about, now, today, and I should read this one. That is valuable knowledge.

TypeID is a spec

https://push.cx/typeid-in-lua

I’ve always been a fan of the way Stripe does identifiers (e.g. cus_12345abcde for a customer). I was recently thinking about what it would look like to do something similar in my own app. This post made me aware that TypeID is what this sort of thing is called and that a spec exists.
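As I read the TypeID spec, an ID is a lowercase type prefix, an underscore, and a 26-character suffix that encodes a 128-bit UUID (v7 recommended) in Crockford's base32 alphabet, lowercased. Here is a minimal sketch of that encoding; the function names are mine, and it skips the spec's rules for validating prefixes.

```python
import uuid
from typing import Tuple

# Crockford base32 alphabet, lowercased (no i, l, o, u)
ALPHABET = "0123456789abcdefghjkmnpqrstvwxyz"


def encode_typeid(prefix: str, value: uuid.UUID) -> str:
    """Encode a UUID as prefix_suffix, where the suffix is 26 base32 characters."""
    n = value.int
    chars = []
    for _ in range(26):  # 26 * 5 = 130 bits; the top 2 bits are always zero
        chars.append(ALPHABET[n & 0b11111])
        n >>= 5
    suffix = "".join(reversed(chars))
    return f"{prefix}_{suffix}" if prefix else suffix


def decode_typeid(typeid: str) -> Tuple[str, uuid.UUID]:
    """Split off the prefix and turn the base32 suffix back into a UUID."""
    prefix, _, suffix = typeid.rpartition("_")
    # A leading character above 7 would need more than 128 bits
    if len(suffix) != 26 or suffix[0] not in "01234567":
        raise ValueError(f"invalid TypeID suffix: {suffix!r}")
    n = 0
    for ch in suffix:
        n = (n << 5) | ALPHABET.index(ch)  # raises ValueError for unknown characters
    return prefix, uuid.UUID(int=n)
```

Pairing this with a time-ordered UUIDv7 keeps the Stripe-style readability of the prefix while the suffix still sorts by creation time.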

Concurrent locks and MultiXacts in Postgres

https://blog.danslimmon.com/2023/12/11/concurrent-locks-and-multixacts-in-postgres/

When multiple transactions have non-exclusive locks on the same row, the database needs a way of tracking that set of transactions beyond the single xmax value that initially locks the row.

Postgres creates a MultiXact. A MultiXact essentially bundles together some set of transactions so that those transactions can all lock the same row at the same time. Instead of a transaction ID, a new MultiXact ID is written to the row’s xmax.
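To make the idea concrete, here is a deliberately simplified Python model of one row being share-locked by several transactions. Everything in it (`Row`, `ToyLockTable`, the ID numbering) is hypothetical: real Postgres also records each member's lock mode, marks a multi xmax with an infomask flag, and cleans up old MultiXacts, none of which is modeled. The one detail it tries to mirror is that adding a locker produces a new MultiXact rather than mutating the existing one, which is my understanding of how Postgres behaves.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Optional


@dataclass
class Row:
    xmax: Optional[int] = None  # a plain transaction ID or a MultiXact ID
    xmax_is_multi: bool = False  # toy stand-in for the tuple's "is multi" flag


class ToyLockTable:
    """Toy model of share-locking rows; not how Postgres is implemented."""

    def __init__(self) -> None:
        self.multixacts: Dict[int, FrozenSet[int]] = {}
        self.next_multi_id = 1

    def lockers(self, row: Row) -> FrozenSet[int]:
        """Return the transaction IDs currently recorded in the row's xmax."""
        if row.xmax is None:
            return frozenset()
        if row.xmax_is_multi:
            return self.multixacts[row.xmax]
        return frozenset({row.xmax})

    def share_lock(self, row: Row, txid: int) -> None:
        current = self.lockers(row)
        if not current:
            # First locker: its transaction ID goes straight into xmax.
            row.xmax, row.xmax_is_multi = txid, False
            return
        if txid in current:
            return  # already holds a lock on this row
        # Another locker: bundle everyone into a new MultiXact and write
        # the MultiXact ID to xmax instead of a transaction ID.
        multi_id = self.next_multi_id
        self.next_multi_id += 1
        self.multixacts[multi_id] = current | {txid}
        row.xmax, row.xmax_is_multi = multi_id, True


table = ToyLockTable()
row = Row()
table.share_lock(row, 100)  # xmax = 100 (plain transaction ID)
table.share_lock(row, 101)  # xmax = MultiXact 1, members {100, 101}
table.share_lock(row, 102)  # xmax = MultiXact 2, members {100, 101, 102}
```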

Esoteric Vim

https://freestingo.com/en/programming/articles/esoteric-vim/

I love learning about the weird, arcane, dusty corners of Vim, so this is a fun read.

Via this article on lobste.rs

Your words are wasted

https://www.hanselman.com/blog/your-words-are-wasted

You are not blogging enough. You are pouring your words into increasingly closed and often walled gardens. You are giving control - and sometimes ownership - of your content to social media companies that will SURELY fail.

In that spirit, I’m trying to write here more often and to link to the things I read, sharing my thoughts and publishing them in the open where I own them.

Don't Guess My Language

https://vitonsky.net/blog/2025/05/17/language-detection/

Every browser sends an Accept-Language header. It tells you what language the user prefers, not based on location, not based on IP, based on their OS or browser config. And yes, users can tweak it if they care enough.

It looks like this: Accept-Language: en-US,en;q=0.9,de;q=0.8

That’s your signal, use it. It’s accurate, it’s free, it’s already there, no licensing, no guesswork, no maintenance.
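Reading the header takes very little code. Below is a minimal Python sketch, assuming a hypothetical app that supports a fixed list of languages. It sorts tags by their q weight and falls back from a regional tag like de-DE to its primary language de, which is a simplification of the full matching rules (RFC 4647); it also ignores the * wildcard.

```python
from typing import List, Tuple


def parse_accept_language(header: str) -> List[Tuple[str, float]]:
    """Return (language tag, q) pairs, most preferred first; q=0 tags are dropped."""
    prefs = []
    for part in header.split(","):
        tag, _, params = part.strip().partition(";")
        tag = tag.strip().lower()
        if not tag:
            continue
        q = 1.0  # a tag without an explicit weight defaults to q=1
        params = params.strip()
        if params.startswith("q="):
            try:
                q = float(params[2:])
            except ValueError:
                q = 0.0  # treat a malformed weight as "not acceptable"
        if q > 0:
            prefs.append((tag, q))
    # sorted() is stable, so tags with equal weights keep their header order.
    return sorted(prefs, key=lambda pref: pref[1], reverse=True)


def pick_language(header: str, supported: List[str], default: str) -> str:
    """Pick the best supported language for the header, else `default`."""
    by_lower = {lang.lower(): lang for lang in supported}
    for tag, _ in parse_accept_language(header):
        if tag in by_lower:
            return by_lower[tag]
        primary = tag.split("-")[0]  # e.g. "de-de" -> "de"
        if primary in by_lower:
            return by_lower[primary]
    return default


print(parse_accept_language("en-US,en;q=0.9,de;q=0.8"))
# [('en-us', 1.0), ('en', 0.9), ('de', 0.8)]
```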

As an example of when the location associated with an IP address is a reasonable heuristic: many course-selling platforms use it to determine what Purchasing Power Parity (PPP) discount, if any, to offer.

Japan’s IC Cards are weird and wonderful

https://aruarian.dance/blog/japan-ic-cards/

It’s serendipitous that this article landed on the front page of lobste.rs while I’m in Japan for the first time this week, and I’ve been using both a physical Suica card and Suica in my iPhone Wallet. I’m an avid CTA rider in Chicago, and I can easily tell how much faster these IC cards scan. On the CTA I expect to stutter-step as I scan to account for the delay, but these IC scanners are near instant.

Sync Engines

Over the last year or so I’ve seen several sync engines pop up as a paradigm shift in how we think about client and server data.

TanStack DB, which is a generalized client but was built in partnership with ElectricSQL, is described as:

A reactive client store for building super fast apps on sync

TanStack DB extends TanStack Query with collections, live queries and optimistic mutations that keep your UI reactive, consistent and blazing fast 🔥

Stop using REST for state synchronization

https://www.mbid.me/posts/stop-using-rest-for-state-synchronization/

What struck me is how incredibly cumbersome, repetitive and brittle this programming model is, and I think much of this is due to using REST as interface between the client and the server. REST is a state transfer protocol, but we usually want to synchronize a piece of state between the client and the server. This mismatch means that we usually implement ad-hoc state synchronization on top of REST, and it turns out that this is not entirely trivial and actually incredibly cumbersome to get right.

Writing that changed how I think about PL

https://bernsteinbear.com/blog/pl-writing/

I’m a big fan of these kinds of collections of blog posts and resources. The kind that I’m used to coming across is Essays on programming that I think about a lot.

turbopuffer

https://turbopuffer.com/docs

turbopuffer is a fast search engine that combines vector and full-text search using object storage, making all your data easily searchable.

Turbopuffer is used by Cursor, Linear, Notion, and others to improve search across large document sets by combining vector search with full-text search (FTS).

I learned about it from this article on how Cursor uses Merkle Trees for Indexing Your Codebase.

Reservoir Sampling

https://samwho.dev/reservoir-sampling/

Reservoir sampling is a technique for selecting a fair random sample when you don't know the size of the set you're sampling from.
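The classic single-pass version is usually called Algorithm R. A minimal Python sketch, assuming the stream is any iterable you can only walk once: keep the first k items, then let item i (0-based) replace a random slot with probability k/(i+1). It never needs to know the stream's length up front.

```python
import random
from typing import Iterable, List, Optional, TypeVar

T = TypeVar("T")


def reservoir_sample(
    stream: Iterable[T], k: int, rng: Optional[random.Random] = None
) -> List[T]:
    """Return up to k items chosen uniformly at random in one pass over stream."""
    rng = rng or random.Random()
    reservoir: List[T] = []
    for i, item in enumerate(stream):
        if i < k:
            reservoir.append(item)  # fill the reservoir with the first k items
        else:
            j = rng.randint(0, i)  # uniform over 0..i inclusive
            if j < k:  # happens with probability k / (i + 1)
                reservoir[j] = item
    return reservoir
```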

Should I Use A Carousel?

https://shouldiuseacarousel.com/

The short answer is "no".

Want to read further? The site has a carousel you can click through, with pull quotes from various studies that found carousels to be very ineffective.

via Sara Soueidan

CleanShot X and Retrobatch

https://leancrew.com/all-this/2025/01/cleanshot-x-and-retrobatch/

Retrobatch (which I’m just now learning about) is a tool that appears to let you define pipelines of custom steps for processing images.

In this post, the author demonstrates a workflow (droplet?) for adding a background and drop shadow with CleanShot X and then trimming the edges down with Retrobatch.

This tool looks powerful, since you can link shell scripts, AppleScripts, and other scripts into your workflows.

I came across this post while reading this one: https://bjb.dev/log/20250129-low-res-screenshots/

Rate Limiting Using the Token Bucket Algorithm

https://en.wikipedia.org/wiki/Token_bucket

I was curious what it looks like to meter access to a resource. Commonly when this topic comes up, that resource is your own API, which has a throughput ceiling. I was coming at it from the angle of an app that internally calls third-party APIs that charge per request. In that scenario I'd like to implement some level of spend control so that I don't wake up to a huge bill.

The Token Bucket Algorithm appears to be one common answer to this question.

Each consumer (perhaps a user) of the app/API has a bucket of tokens, and each token can be redeemed for one access of the limited resource. Each bucket can only hold so many tokens, and you can decide exactly how you want to meter refilling it. I think it is typically handled by periodically (e.g. "every X seconds") adding a token to each bucket that isn't full. The other extreme could be requiring a user to manually "refill" the bucket, i.e. recharge their account with more credits. You might even want to mix in a heuristic guided by some global value like "% of max spend for the period."
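Here is a minimal Python sketch of a single bucket, assuming a continuous refill rate rather than a timer that adds whole tokens every X seconds: instead of running a background job, it computes how many tokens have accrued since the last check whenever a request arrives. `TokenBucket` and its parameters are illustrative names, not from any library.

```python
import time
from typing import Callable


class TokenBucket:
    """One consumer's bucket: holds at most `capacity` tokens, refilled over time."""

    def __init__(
        self,
        capacity: float,
        refill_per_second: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock  # injectable so tests can control time
        self.tokens = capacity  # start with a full bucket
        self.last_refill = clock()

    def _refill(self) -> None:
        # Lazily add the tokens that accrued since the last call, capped at capacity.
        now = self.clock()
        elapsed = now - self.last_refill
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now

    def try_consume(self, cost: float = 1) -> bool:
        """Spend `cost` tokens if available; return False (deny) otherwise."""
        self._refill()
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

For per-user metering you would keep one bucket per consumer (e.g. a dict keyed by user ID) and call `try_consume()` before each paid third-party API call. The manual "recharge your credits" variant corresponds to a `refill_per_second` of 0 with tokens added explicitly.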

I like the carnival analogy for the Token Bucket Algorithm described here: https://www.krakend.io/docs/throttling/token-bucket/#a-quick-analogy

Never lose a change again: Undo branches in Vim

https://advancedweb.hu/never-lose-a-change-again-undo-branches-in-vim/

Vim uses a tree instead of a list when tracking your change history. That means even if you undo some changes and make a new change, you can still get back to the changes you originally undid. In a traditional editor/IDE with a list representation, that change would be lost.

This blog post has visuals to make it easier to understand the concept.
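A toy Python model of the difference, assuming nothing about Vim's actual implementation: each state keeps its parent and children, and a separate chronological list lets you step back through every state ever created, roughly the way Vim's g- command walks older text states. The class and method names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)  # compare states by identity, not by contents
class State:
    text: str
    parent: Optional["State"] = None
    children: List["State"] = field(default_factory=list)


class UndoTree:
    def __init__(self, text: str = "") -> None:
        self.current = State(text)
        self.timeline: List[State] = [self.current]  # every state, in creation order

    def edit(self, new_text: str) -> None:
        """A new change always adds a child; existing branches are kept."""
        node = State(new_text, parent=self.current)
        self.current.children.append(node)
        self.timeline.append(node)
        self.current = node

    def undo(self) -> None:
        if self.current.parent is not None:
            self.current = self.current.parent

    def redo(self) -> None:
        # Like ordinary redo, follow only the most recently created branch.
        if self.current.children:
            self.current = self.current.children[-1]

    def earlier(self) -> None:
        """Step to the previously created state, even if it is on another branch."""
        index = self.timeline.index(self.current)
        if index > 0:
            self.current = self.timeline[index - 1]


tree = UndoTree()
tree.edit("hello")
tree.edit("hello world")
tree.undo()               # back to "hello"
tree.edit("hello there")  # new branch; a list-based history would drop "hello world"
tree.earlier()
print(tree.current.text)  # hello world
```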

Notes, not a blog – David Crespo

https://crespo.business/posts/notes-not-a-blog/

I’ve never managed to get a blog going, but I take a ton of notes for myself. So this time I’m trying the mental hack of calling these posts “notes” and writing about the most mundane things that could still be interesting to another person.

Same for me. Ever since I started writing “blogmarks”, which have evolved into what I’d consider notes, I find myself posting all the time. A blog post, on the other hand, feels daunting: I get halfway through writing it and then give up.

This is the rationale that has made it relatively easy for me to write so many TILs over the years.

BashMatic - BASH/DSL helpers for the rest of us.

https://bashmatic.dev/

A powerful BASH framework with 900+ helper functions to make your BASH scripting easier, more enjoyable, awesome looking, and most importantly, fun.

With Claude lowering the barrier to try things out, I’ve been using it to write all kinds of one-off bash scripts to help with various day-to-day things. I notice common patterns arise and certain nice-to-haves that are missing. It made me wonder if there was a library of bash scripting utility functions. Sure enough. Very cool project!

Eisvogel Pandoc Template — GitHub

https://github.com/Wandmalfarbe/pandoc-latex-template

A clean pandoc LaTeX template to convert your markdown files to PDF or LaTeX. It is designed for lecture notes and exercises with a focus on computer science.

I've only ever generated super-bland PDFs from markdown using Pandoc. I'm pleasantly surprised to see that you can get such visually nice results starting from a markdown document (with some frontmatter).

I learned about this from Hans Schnedlitz's post on running Pandoc from Docker.

Bloom filters - Eli Bendersky

https://eli.thegreenplace.net/2025/bloom-filters/

A great explanation of Bloom filters, with visuals and an implementation walkthrough in Go.
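For a quick feel of the mechanics in Python (this is not a port of Eli's Go code): a Bloom filter is a bit array plus k bit positions per item. This sketch derives the k positions from one SHA-256 digest with double hashing (h1 + i * h2), a common trick swapped in here for k independent hash functions; the sizes in the example are arbitrary.

```python
import hashlib
from typing import Iterator


class BloomFilter:
    def __init__(self, num_bits: int, num_hashes: int) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)  # all bits start at 0

    def _positions(self, item: str) -> Iterator[int]:
        digest = hashlib.sha256(item.encode()).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1  # forced odd so it is never 0
        for i in range(self.num_hashes):
            yield (h1 + i * h2) % self.num_bits

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item: str) -> bool:
        """False means definitely absent; True means possibly present."""
        return all(
            self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(item)
        )


bf = BloomFilter(num_bits=1000, num_hashes=7)
bf.add("apple")
print(bf.might_contain("apple"))  # True (Bloom filters have no false negatives)
```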

Fast(er) regular expression engines in Ruby

https://serpapi-com.cdn.ampproject.org/c/s/serpapi.com/blog/faster-regular-expression-engines-in-ruby/amp/

A comparison of regular expression engines for Ruby, useful if you’re using regex at scale.

Grep by example: Interactive guide

https://antonz.org/grep-by-example/

grep is the ultimate text search tool available on virtually all Linux machines. While there are now better alternatives (such as ripgrep), you will still often find yourself on a server where grep is the only search tool available. So it's nice to have a working knowledge of it.

There is a mini book, a playground, and interactive examples of practical ways to use grep.

Speaking in Tongues: PostgreSQL and Character Encodings

https://thebuild.com/blog/2024/10/27/speaking-in-tongues-postgresql-and-character-encodings/

The character encoding decision is an easy one: Use UTF-8. Although one can argue endlessly over whether or not Unicode is the “perfect” way of encoding characters, we almost certainly will not get a better one in our lifetime, and it has become the de facto (and in many cases, such as JSON, the de jure) standard for text on modern systems.

Expand and Contract Pattern

The Expand and Contract pattern is a general database schema migration pattern that minimizes the locking and downtime that can occur when irreversibly modifying a production database schema. It is also known as the Parallel Change pattern.

Certain schema changes and operations can involve full table rewrites and other locking that cannot be tolerated by a system with production workloads. These can sneak up on us in production if we don't know about them because the same schema changes against non-production data may be instantaneous.

This pattern can be necessary for something as simple as SET NOT NULL on an existing column. You'd use it to migrate a primary key from int to bigint. It would also be used for more complex operations like splitting a monolithic table into multiple normalized tables or retroactively partitioning a table.

So, what is the "Expand and Contract" pattern?

There is no exact recipe. Each migration can require devising a custom approach. The general pattern holds and looks like this:

1. Expand the schema

Expand the existing schema to accommodate the "new" thing. This is often adding a new column or table which we will eventually swap to.

2. Sync write operations

Ensure write operations are kept in sync between the existing and "new" thing. This can either be handled by setting up database triggers now or by updating app code in the next step to write both places.

3. Expand application code interface

Update all application code to start writing to the "new" column or table. If you didn't set up triggers in the previous step, then you'll need the app code to also continue to write to the existing column or table.

4. Backfill new schema

Backfill the "new" column or table with all the existing data. Depending on the nature of the migration, some sort of normalization or default value may need to be applied to certain records during this operation.

5. Cut reads over to new schema

At this point, the app is still reading from the existing schema. If this is an in-place migration, this step may primarily be a single transaction that performs a double rename, so that the app still references the same-named column or table but is actually using the "new" entity. This works if you're using triggers to stay in sync, which you'll update or remove in that same transaction. Be more careful if the app is still writing to both places.

Alternatively, you may opt for deploying app changes that cut all reads over to the new schema. No app code should read from the existing schema at this point, though some sync code may still be in place.

6. Ensure app works as expected

Beyond any automated testing that was enforced during previous steps, you'll want to thoroughly smoke test your app. It is now running on the new schema, reading migrated, backfilled data. Is everything looking good and checking out?

7. Remove writes to existing entities

If you haven't already, now is the time to remove any triggers or app code that are still syncing the old and new entities.

8. Contract the schema

We no longer need the old column or table that we started with. It is already growing out of date as our system continues to field write operations. The column or table can now be dropped.

And that's it. That's generally how to apply the Expand and Contract pattern.
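Here is a compressed, runnable sketch of the steps above using Python's built-in sqlite3 module and an in-memory database. It only shows the order of operations: SQLite's locking is nothing like Postgres's, so it says nothing about which operations are safe on a busy production table. The orders table, the switch from a REAL amount in dollars to an INTEGER amount_cents, and the function names are hypothetical; only inserts are synced (a real migration would also handle updates and deletes), and the app-code steps (3 and 6) are left out.

```python
import sqlite3


def expand_and_sync(conn: sqlite3.Connection) -> None:
    """Steps 1-2: create the "new" table and mirror new writes into it."""
    conn.executescript("""
        CREATE TABLE orders_v2 (
            id INTEGER PRIMARY KEY,
            amount_cents INTEGER NOT NULL
        );
        -- Only INSERTs are mirrored here; UPDATE/DELETE triggers omitted.
        CREATE TRIGGER orders_sync AFTER INSERT ON orders
        BEGIN
            INSERT INTO orders_v2 (id, amount_cents)
            VALUES (NEW.id, CAST(ROUND(NEW.amount * 100) AS INTEGER));
        END;
    """)


def backfill(conn: sqlite3.Connection) -> None:
    """Step 4: copy existing rows, skipping any the trigger already mirrored."""
    conn.execute("""
        INSERT OR IGNORE INTO orders_v2 (id, amount_cents)
        SELECT id, CAST(ROUND(amount * 100) AS INTEGER) FROM orders
    """)
    conn.commit()


def cut_over(conn: sqlite3.Connection) -> None:
    """Steps 5 and 7: drop the sync trigger and double-rename in one transaction."""
    conn.executescript("""
        BEGIN;
        DROP TRIGGER orders_sync;
        ALTER TABLE orders RENAME TO orders_old;
        ALTER TABLE orders_v2 RENAME TO orders;
        COMMIT;
    """)


def contract(conn: sqlite3.Connection) -> None:
    """Step 8: drop the old table once nothing reads or writes it."""
    conn.executescript("DROP TABLE orders_old;")


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL NOT NULL);
    INSERT INTO orders (id, amount) VALUES (1, 19.99), (2, 5.0);
""")
expand_and_sync(conn)
# A write that lands mid-migration is mirrored by the trigger.
conn.execute("INSERT INTO orders (id, amount) VALUES (3, 12.5)")
conn.commit()
backfill(conn)
cut_over(conn)
contract(conn)
print(conn.execute("SELECT id, amount_cents FROM orders ORDER BY id").fetchall())
# [(1, 1999), (2, 500), (3, 1250)]
```

The ROUND before the CAST matters: 19.99 * 100 comes out slightly below 1999 in floating point, and a bare CAST would truncate it to 1998.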

Note: you should definitely take a backup before starting this work or, more surgically, right before you cut reads over.

There is a great resource from Prisma on this as well: Using the expand and contract pattern | Prisma's Data Guide

What is a tool?